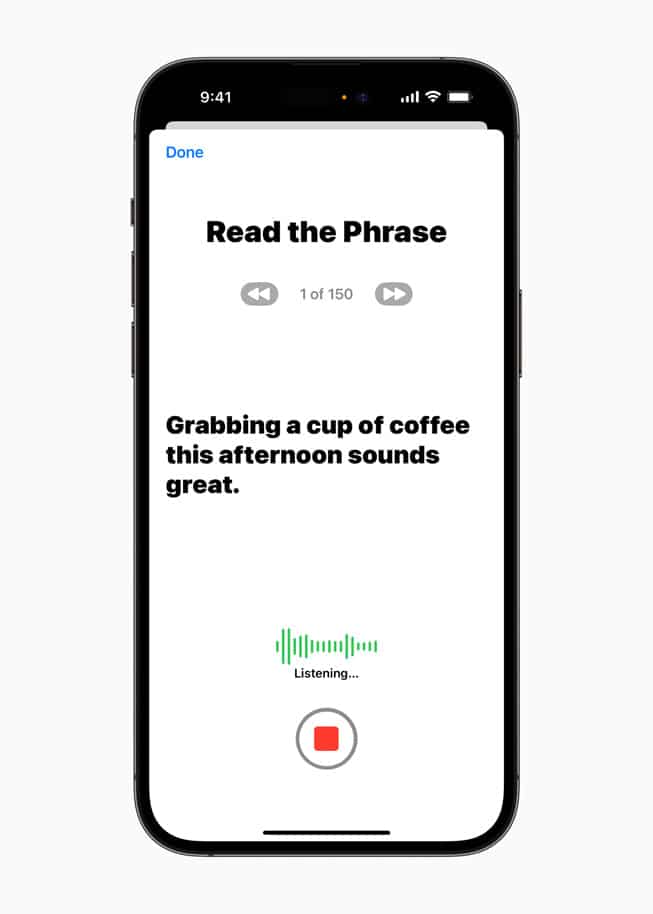

Apple has developed new accessibility features for iPhones and iPads that let the device speak for you (Live Speech), speak on your behalf in your own voice (Personal Voice), and read out text on objects in front of you (Point and Speak). The Live Speech feature is useful to people who either cannot speak or are at risk of losing their ability to speak. It is also useful to someone with a throat illness that makes it painful to speak, or with a condition such as ALS that threatens their vocal capabilities.

The Live Speech feature could speak for you during meetings if you are having vocal problems (or if, like many people, you'd rather type than speak). The Point and Speak feature scans text on nearby objects and reads it aloud, which is useful to people with vision impairment.

Apple says that the Live Speech technology is powered by on-device machine learning to protect your data (because it won't have to pass through Apple's servers). Because the processing happens on the device and works offline, response times and reliability should stay consistent regardless of the speed or status of your Internet connection.
